Transaction isolation in Business Central

- adrogin

- Mar 20, 2023

- 10 min read

Updated: Mar 21, 2023

Business Central 22 introduces a new table property ReadIsolation which allows the developers to control transaction isolation in a more granular way compared to previous versions of BC.

It's not something totally new to Business Central. After all, in previous versions we had the Database.CurrentTransactionType procedure which also gave control over the isolation of database reads. Besides, reports and XMLports have the TransactionType property which sets the read type for the whole object. The new table property makes it more granular in the sense that the read isolation can be changed for a specific record variable instead of the whole object or session.

Transaction isolation is a way a database management system handles multiple concurrent transactions accessing the same database objects. Parallel transactions often have to "compete" for resources, attempting to place a lock on the same row or a table, or read data locked by another transaction. One can think of the isolation level as the degree of the tradeoff between consistency and concurrency - the more isolated and consistent the transaction is expected to be, the more negative effect on performance this isolation has.

Transaction isolation levels are defined in the ANSI SQL standard though a specific set of anomalies, or phenomena, that can (or cannot) occur under each isolation level. These anomalies are: dirty reads, non-repeatable reads, and phantoms.

And the transaction isolation levels defined in the ANSI model through the possibility of the anomalies are: Serializable, Repeatable Read, Read Committed, and Read Uncommitted.

Each item in this list is one step down in the level of transaction isolation, from the strictest isolation to very loose control. Each level allows anomalies which are possible in higher, stricter levels, plus adds one of its own. Serializable transaction is the strictest isolation level, where no read anomalies can occur, whilst ReadUncommitted is the least restrictive - it allows all possible kinds of anomalies.

In this post, I will give a few examples of the read anomalies from the Business Central AL perspective and show how these phenomena relate to the ReadIsolation property. There are a few code examples reading and updating the item journal in two parallel transactions. Every test assumes that the DEFAULT batch of the ITEM template of the Item Journal Line table contains one record: a positive adjustment of 10 pcs of the item 1928-S on the EAST location.

Another important thing to keep in mind when running these tests is the BC buffered inserts which can conceal the effects of dirty reads to some extent. To avoid delving into details of buffered inserts here, I simply switched it off in my CustomSettings.config.

<add key="BufferedInsertEnabled" value="false" />Apart from buffered inserts, BC query caching also masks the transaction isolation phenomena. Query caching and its effects on transaction interaction is a topic for another good deep dive, and for now I use SelectLatestVersion() to force BC to reset the cache and run the query again.

Because of these tweaks, the examples below can be considered somewhat purified tests executed in controlled lab conditions rather then code in its natural habitat.

Considering this as a disclaimer, and the post being more theoretical rather than any practical guideline, let's get started.

Read Uncommitted Isolation - Dirty Reads

The first, and the least restrictive isolation level is Read Uncommitted. In Business Central, it corresponds to ReadIsolation::ReadUncommitted. This is the only transaction isolation level that allows dirty reads, and this is the default value for all reads in Business Central.

Dirty read is an effect of a transaction reading modifications done by another transaction which is not yet committed, "work in progress" data that can be inconsistent. The following code sample demonstrates a dirty read of the item journal lines. It is split in two objects the first of which, codeunit "Insert Item Journal Line" runs in a background session and inserts a journal line, while the procedure ReadUncommitted() counts the total quantity of items in the journal.

codeunit 70103 "Insert Item Journal Line"

{

TableNo = "Item Journal Batch";

trigger OnRun()

var

ItemJournalLine: Record "Item Journal Line";

LastLineNo: Integer;

begin

ItemJournalLine.SetRange(

"Journal Template Name", Rec."Journal Template Name");

ItemJournalLine.SetRange("Journal Batch Name", Rec.Name);

if ItemJournalLine.FindLast() then

LastLineNo := ItemJournalLine."Line No.";

ItemJournalLine.Init();

ItemJournalLine.Validate(

"Journal Template Name", Rec."Journal Template Name");

ItemJournalLine.Validate("Journal Batch Name", Rec.Name);

ItemJournalLine.Validate("Line No.", LastLineNo + 10000);

ItemJournalLine.Validate(

"Entry Type",

ItemJournalLine."Entry Type"::"Positive Adjmt.");

ItemJournalLine.Validate("Posting Date", WorkDate());

ItemJournalLine.Validate("Item No.", '1928-S');

ItemJournalLine.Validate("Location Code", 'EAST');

ItemJournalLine.Validate(Quantity, 10);

ItemJournalLine.Insert(true);

Sleep(10000);

Error('');

end;

}This code simply inserts an item journal line and sleeps for a while to imitate some long processing, which errors out in the end. So these 10 lamps can be considered to have never existed, because the procedure fails and never commits the data.

Now the other piece of this code sample is the procedure which runs in another session - I simply call it from an page action trigger. It starts the background session with the journal insert, and calculates the item quantity in the journal lines while the background session is still running, and repeats the same summation after the other session is rolled back.

local procedure ReadUncommitted()

var

ItemJournalBatch: Record "Item Journal Batch";

ItemJournalLine: Record "Item Journal Line";

SessionId: Integer;

ItemQty: array[2] of Decimal;

TimeStamps: array[2] of Time;

begin

ItemJournalBatch.Get('ITEM', 'DEFAULT');

StartSession(

SessionId, Codeunit::"Insert Item Journal Line",

CompanyName, ItemJournalBatch);

Sleep(5000);

Database.SelectLatestVersion();

TimeStamps[1] := Time;

ItemJournalLine.SetRange("Item No.", '1928-S');

ItemJournalLine.ReadIsolation :=

ItemJournalLine.ReadIsolation::ReadUncommitted;

ItemJournalLine.CalcSums(Quantity);

TimeStamps[2] := Time;

ItemQty[1] := ItemJournalLine.Quantity;

Sleep(7000);

Database.SelectLatestVersion();

ItemJournalLine.CalcSums(Quantity);

ItemQty[2] := ItemJournalLine.Quantity;

Message(

'Quantity with background session: %1\\' +

'Quantity after rollback: %2\\' +

'Time to read: %3 ms',

ItemQty[1], ItemQty[2], TimeStamps[2] - TimeStamps[1]);

end;I also use the Sleep function in main session - first to give enough time for the background session to start its transaction and insert the journal line, then to wait until it rolls back the changes. Synchronizing the session idle times, I make sure that the first call of CalcSums() happens while the background transaction is active, and the second one is triggered after the rollback.

Dirty read

Let's run the code and look at the message that it displays after doing all the CalcSums.

This is what a dirty read looks like. The erroneous transaction was rolled back, and its data is supposed to never have existed. But the other session captured this intermediate state and added the transient 10 items to its count. When the same query was repeated after the rollback, the CalsSums returned just 10 items. Note the time it took the function to calculate the amount. It's very fast. We will need this to compare with reads under other isolation levels.

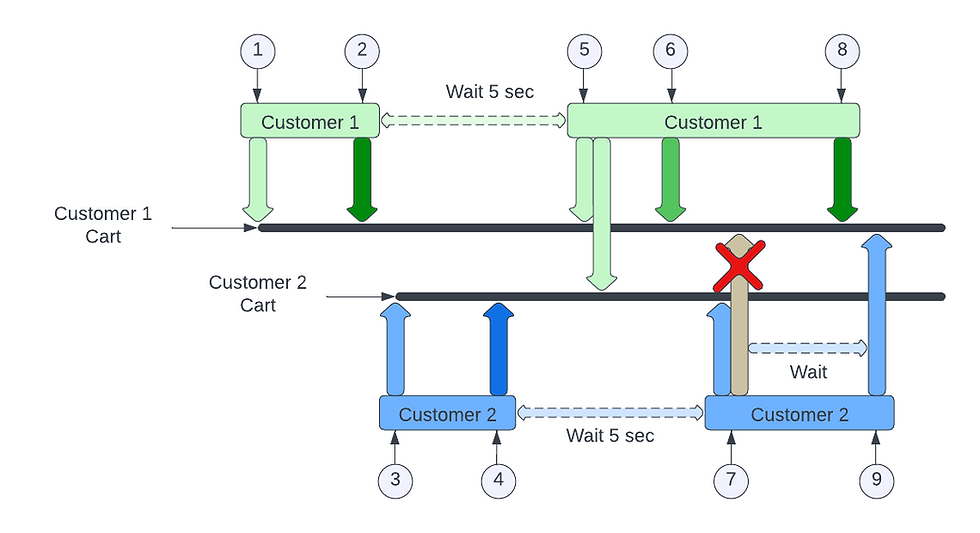

The picture below illustrates the transaction interaction in case of uncommitted reads.

ReadCommitted Isolation - Non-repeatable Reads

Now let's take one step up the isolation hierarchy and change the ReadIsolation property for the table from ReadUncommitted to ReadCommitted:

ItemJournalLine.ReadIsolation :=

ItemJournalLine.ReadIsolation::ReadCommitted;And run the same code with only this little change.

Quantity is consistent now, it does not take the uncommitted journal line into account, but the time it took the CalcSums function to calculate the quantity has skyrocketed to 5.1 seconds. All because the SQL engine has to suspend the transaction and wait for the locking session to complete. This is the illustration of the concurrency/consistency tradeoff which I mentioned above. With ReadCommitted isolation, we gained in data consistently compared to ReadUncommitted, but lost in concurrency.

Update: Note that this example is correct for Business Central on-premises. SaaS environments which run on Azure SQL in fact use Snapshot isolation instead. More in this post: ReadCommitted Isolation in Azure SQL.

Non-repeatable read

So ReadCommitted isolation tightens the concurrency restrictions eliminating dirty reads, but other anomalies - non-repeatable reads and phantoms - are still possible.

The next code example will demonstrate a non-repeatable read under the ReadCommitted isolation. Same as before, this sample include a codeunit which runs in a background session and the foreground procedure that starts another session and reads item journal lines. Now the background session is modifying the single journal line in the DEFAULT batch, updating the quantity from 10 to 5.

codeunit 70104 "Update Item Journal Line"

{

TableNo = "Item Journal Batch";

trigger OnRun()

var

ItemJournalLine: Record "Item Journal Line";

begin

ItemJournalLine.SetRange(

"Journal Template Name", Rec."Journal Template Name");

ItemJournalLine.SetRange("Journal Batch Name", Rec.Name);

ItemJournalLine.FindFirst();

ItemJournalLine.Validate(Quantity, 5);

ItemJournalLine.Modify(true);

end;

}And the main session that counts the quantity in journal lines, starts the background session, and re-queries the quantity while the other transaction is completed. To simplify the test, I don't add time marks here, but it must be obvious that should the background session slow down and take long to complete, the main thread will have to wait for either commit or rollback - just like in the previous example.

local procedure ReadNonRepeatable()

var

ItemJournalBatch: Record "Item Journal Batch";

ItemJournalLine: Record "Item Journal Line";

SessionId: Integer;

ItemQty: array[2] of Decimal;

begin

ItemJournalLine.SetRange("Item No.", '1928-S');

ItemJournalLine.ReadIsolation :=

ItemJournalLine.ReadIsolation::ReadCommitted;

ItemJournalLine.CalcSums(Quantity);

ItemQty[1] := ItemJournalLine.Quantity;

ItemJournalBatch.Get('ITEM', 'DEFAULT');

StartSession(

SessionId, Codeunit::"Update Item Journal Line",

CompanyName, ItemJournalBatch);

Sleep(5000);

Database.SelectLatestVersion();

ItemJournalLine.CalcSums(Quantity);

ItemQty[2] := ItemJournalLine.Quantity;

Message(

'Quantity before background session: %1\\' +

'Quantity after background session: %2\\',

ItemQty[1], ItemQty[2]);

end;Let's run the code and check the message.

This is what a non-repeatable read is. Transaction 2 is allowed to modify rows while the transaction 1 which read these rows is still active. Therefore, when transaction 1 repeats the same query, it may receive different result.

RepeatableRead isolation - Phantoms

Once again, let's repeat the test keeping the same code, just taking the transaction isolation one step higher in the hierarchy - this time from ReadCommitted to RepeatableRead.

And when we run the test, we can see that all reads within the transaction are consistent.

But if we check the event log, we can where the catch is this time. SQL Server logged a warning informing about a long-running query

Action completed successfully, but it took longer than the given threshold. Execution time for the background session now grew from practically zero to 4963 ms.

Take a look at the next picture to see how the transactions interact under the Repeatable Read isolation and why the background session is taking so long to complete.

This time Transaction 2, which is running in the background and modifies the journal line, is not allowed to do the modification until the Transaction 1 which had already retrieved the journal line, completes and releases its lock on the row. Only when Transaction 1 is committed, Transaction 2 can commit as well, so that a new Transaction 3 can see the modified value 5.

Once again, we see how the drive to stricter consistency backfires on performance.

Phantom

The last read anomaly that yet remains uncovered is phantom. The concept of a phantom is a bit trickier than the other two. Dirty reads and non-repeatable reads are specific table rows that two transactions access at the same time with different intent. Phantom is a row that does not exist when a transaction reads data, but is inserted later, so that the same query executed again in the same transaction will read the new row.

Let's run another test, which, I hope, can clarify this somewhat hazy definition.

codeunit 70105 "Insert Phantom Item Jnl. Line"

{

TableNo = "Item Journal Batch";

trigger OnRun()

var

ItemJournalLine: Record "Item Journal Line";

LastLineNo: Integer;

begin

ItemJournalLine.SetRange(

"Journal Template Name", Rec."Journal Template Name");

ItemJournalLine.SetRange("Journal Batch Name", Rec.Name);

if ItemJournalLine.FindLast() then

LastLineNo := ItemJournalLine."Line No.";

ItemJournalLine.Init();

ItemJournalLine.Validate(

"Journal Template Name", Rec."Journal Template Name");

ItemJournalLine.Validate("Journal Batch Name", Rec.Name);

ItemJournalLine.Validate("Line No.", LastLineNo + 10000);

ItemJournalLine.Validate(

"Entry Type",

ItemJournalLine."Entry Type"::"Positive Adjmt.");

ItemJournalLine.Validate("Posting Date", WorkDate());

ItemJournalLine.Validate("Item No.", '1928-S');

ItemJournalLine.Validate("Location Code", 'EAST');

ItemJournalLine.Validate(Quantity, 10);

ItemJournalLine.Insert(true);

end;

}This codeunit which is intended to insert a phantom item journal line, is in fact, the same as another codeunit "Insert Item Jnl. Line" which I declared in the beginning of this post, except that this one does not have any sleeps inside, and it does not error out, but successfully inserts the journal line record and commits.

Procedure ReadPhantom is also pretty much the same as the previous ones. This procedure also calculates the total quantity in item journal lines before starting the background session, and repeats the query after the background transaction is committed.

local procedure ReadPhantom()

var

ItemJournalBatch: Record "Item Journal Batch";

ItemJournalLine: Record "Item Journal Line";

SessionId: Integer;

ItemQty: array[2] of Decimal;

begin

ItemJournalLine.SetRange("Item No.", '1928-S');

ItemJournalLine.ReadIsolation :=

ItemJournalLine.ReadIsolation::RepeatableRead;

ItemJournalLine.CalcSums(Quantity);

ItemQty[1] := ItemJournalLine.Quantity;

ItemJournalBatch.Get('ITEM', 'DEFAULT');

StartSession(

SessionId, Codeunit::"Insert Phantom Item Jnl. Line",

CompanyName, ItemJournalBatch);

Sleep(5000);

Database.SelectLatestVersion();

ItemJournalLine.CalcSums(Quantity);

ItemQty[2] := ItemJournalLine.Quantity;

Message(

'Quantity before background session: %1\\' +

'Quantity after background session: %2', ItemQty[1], ItemQty[2]);

end;Transaction 1 (the foreground) in this example sets the filter on the "Item No." field to calculate the total quantity of the given item, and the second transaction inserts the new line for the same item. This new line inserted in the background session is the phantom, because when the Transaction 1 repeats the same CalcSums statement, the new result is different from the first one.

Transaction 2 changed the query result for Transaction 1 by inserting a record which falls into its filtered range. Note that in no way does the Transaction 2 change any records currently retrieved by the Transaction 1. This new item journal line did not exist by the time when Transaction 2 started (obviously because Transaction 2 just created it). It's just a row which the main session reads when it runs the same query with the same filter range.

Serializable isolation - No anomalies

Serializable, the most restrictive isolation level prevents all the anomalies, including phantom reads, and guarantees read consistency within the transaction, so that the same query executed twice always returns the same result.

Serializable isolation is something I can't demonstrate in Business Central, since there is no value in the ReadIsolation property that corresponds to this isolation level. In older version of Dynamics NAV, and even early releases of BC, serializable reads were used under the Snapshot and Update transaction types, but this has been changed in later releases.

Microsoft Learn portal is a bit ambiguous on this matter:

In earlier versions of Dynamics 365 Business Central, the Snapshot and Update transaction types performed read operations with SERIALIZABLE locking until the table was either modified by a write operation or locked with the LockTable method. In Dynamics 365 Business Central, these transaction types perform read operations with REPEATABLE READ locking until the table is either modified by a write operation or locked with the LockTable method.

It's unclear what happens with the transaction type when LockTable is called or the record is modified. Another test is needed here. But so far, I can demonstrate the effect of a Serializable transaction with a SQL query in the SQL Server Management Studio.

BEGIN TRAN

SELECT SUM("83"."Quantity")

FROM "default".dbo."CRONUS International Ltd_$Item Journal Line" "83"

WITH(SERIALIZABLE)

WHERE ("Item No_"='1928-S') OPTION(FAST 50)

WAITFOR DELAY '00:00:30'

ROLLBACKThis is the same query that the Business Central server issues to sum up the quantity on the item journal filtered by the Item No. I just changed the table hint to SERIALIZABLE. Note that I removed the application ID from the table name for readability. I start this query in Management Studio, and while it's running, trigger the following procedure in BC.

trigger OnAction()

var

ItemJournalBatch: Record "Item Journal Batch";

begin

ItemJournalBatch.Get('ITEM', 'DEFAULT');

Codeunit.Run(

Codeunit::"Insert Phantom Item Jnl. Line", ItemJournalBatch);

end;And when I run this code, after a while I get an error message informing me that the transaction was rolled back.

Important thing to grasp about phantoms, and I will highlight it again, is that the only link between two transaction is the item number. Transaction 2 inserts a new item journal line with Item No. = '1928-S', whilst Transaction 1 reads all records with the same item number.

This completes the overview of transaction isolation levels. You might have noticed that I missed one of the values in the ReadIsolation option set - UpdLock. Well, I left it out intentionally for now. The reason is that UpdLock is not a transaction isolation. I would say that ReadIsolation is not a precisely correct name for the property, because only three - ReadUncommitted, ReadCommitted, and RepeatableRead - are isolation levels. UpdLock is still a valid table hint, but the important difference is that transaction isolation hints only tell the database engine how much isolated from effects of other processes the transaction must be, and the DB engine decides how to achieve this. UpdLock hint, on the other hand, requires a specific lock type to be placed on all rows selected by the SQL statement.

More details on this in the next post. I will write more about isolation levels, how the required isolation is implemented in SQL Server, and the difference between isolation and a lock hint. Stay tuned.

Well explained thanks.