ReadCommitted isolation in Azure SQL

- adrogin

- Mar 21, 2023

In my previous post, Transaction Isolation in Business Central, I gave a rather long and detailed review of transaction isolation levels and their effect on how transactions interact, from the Business Central perspective. That post was more of a theoretical introduction to the ANSI SQL transaction isolation model than a practical guideline. This new (and much shorter) post brings the theory one step closer to practice. I am going to run one more test, this time in a SaaS environment, to reveal the real magic of Azure SQL and show what makes the ReadIsolation property so valuable.

Just like in the previous tests, I'm declaring a codeunit that is executed in a background session, inserts item journal lines, and errors out after some wait time. Again, the five-second sleep is intended to imitate heavy load in the session and a long-running transaction.

codeunit 70103 "Insert Item Journal Line"

{

TableNo = "Item Journal Batch";

trigger OnRun()

var

ItemJournalLine: Record "Item Journal Line";

LineNo: Integer;

I: Integer;

begin

ItemJournalLine.SetRange(

"Journal Template Name", Rec."Journal Template Name");

ItemJournalLine.SetRange("Journal Batch Name", Rec.Name);

if ItemJournalLine.FindLast() then

LineNo := ItemJournalLine."Line No.";

for I := 1 to 20 do begin

ItemJournalLine.Init();

ItemJournalLine.Validate(

"Journal Template Name", Rec."Journal Template Name");

ItemJournalLine.Validate("Journal Batch Name", Rec.Name);

ItemJournalLine.Validate("Line No.", LineNo + I * 10000);

ItemJournalLine.Validate(

"Entry Type",

ItemJournalLine."Entry Type"::"Positive Adjmt.");

ItemJournalLine.Validate("Posting Date", WorkDate());

ItemJournalLine.Validate("Item No.", '1928-S');

ItemJournalLine.Validate("Location Code", 'EAST');

ItemJournalLine.Validate(Quantity, 10);

ItemJournalLine.Insert(true);

end;

Sleep(5000);

Error('');

end;

}

I can't switch off the buffered inserts in a SaaS environment as easily as I did in my local container, so I insert a batch of twenty lines instead, to fill the record buffer and make BC flush the buffer and call the Insert statement. This is the only change compared to my previous tests.

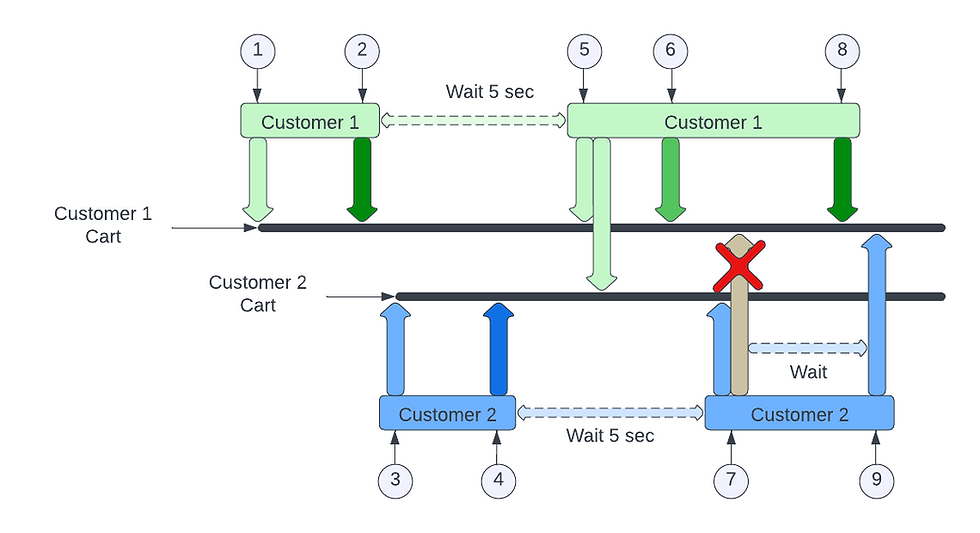

The codeunit is started from a procedure that can be called from the main user session. I simply call it from a page action trigger. The main session sums the quantity in the item journal lines, filtering on the item '1928-S', while the background session inserts lines with the same item at the same time. The session calculating the total runs the query with the ReadUncommitted hint, so the uncommitted lines are included in the total.

local procedure ReadUncommitted()

var

ItemJournalBatch: Record "Item Journal Batch";

ItemJournalLine: Record "Item Journal Line";

SessionId: Integer;

ItemQty: array[2] of Decimal;

TimeStamps: array[2] of Time;

begin

ItemJournalBatch.Get('ITEM', 'DEFAULT');

StartSession(

SessionId, Codeunit::"Insert Item Journal Line",

CompanyName, ItemJournalBatch);

Sleep(5000);

Database.SelectLatestVersion();

TimeStamps[1] := Time;

ItemJournalLine.SetRange("Item No.", '1928-S');

ItemJournalLine.ReadIsolation :=

ItemJournalLine.ReadIsolation::ReadUncommitted;

ItemJournalLine.CalcSums(Quantity);

TimeStamps[2] := Time;

ItemQty[1] := ItemJournalLine.Quantity;

Sleep(7000);

Database.SelectLatestVersion();

ItemJournalLine.CalcSums(Quantity);

ItemQty[2] := ItemJournalLine.Quantity;

Message(

'Quantity with background session: %1\\' +

'Quantity after rollback: %2\\' +

'Time to read: %3 ms',

ItemQty[1], ItemQty[2], TimeStamps[2] - TimeStamps[1]);

end;

When this code is executed, we see an example of a dirty read: the total includes the lines that were never committed and were later rolled back.

Let's now follow the same testing procedure and change the ReadIsolation for the item journal line record from ReadUncommitted to ReadCommitted.

ItemJournalLine.ReadIsolation :=

ItemJournalLine.ReadIsolation::ReadCommitted;

Run the code now and have a look at the message box.

If you read my previous post, where I was running the same code with the ReadUncommitted and ReadCommitted hints, you might remember that enabling the ReadCommitted isolation slowed it down dramatically, because the transaction reading the data had to wait for the background session to roll back. But now it's totally different!

I have stressed several times the consistency/concurrency tradeoff of transaction isolation, repeating the statement that stricter isolation levels backfire on performance. In this example, we see that the query executed under the more restrictive ReadCommitted isolation produced a more consistent result, but did not lose anything in terms of performance.
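
The comparison can be repeated without editing the procedure between runs by passing the isolation level in as a parameter. This is only a minimal sketch and not part of the original test: the procedure name SumJournalQuantity and its parameters are hypothetical, and it assumes the IsolationLevel option type that the ReadIsolation property accepts.

```al
local procedure SumJournalQuantity(Level: IsolationLevel; var ReadTime: Duration): Decimal
var
    ItemJournalLine: Record "Item Journal Line";
    StartedAt: DateTime;
begin
    // Clear the data cache so that the read is served by the database.
    Database.SelectLatestVersion();

    ItemJournalLine.SetRange("Item No.", '1928-S');
    // The isolation level set here applies to reads on this record instance only.
    ItemJournalLine.ReadIsolation := Level;

    StartedAt := CurrentDateTime;
    ItemJournalLine.CalcSums(Quantity);
    // DateTime - DateTime yields a Duration (milliseconds).
    ReadTime := CurrentDateTime - StartedAt;

    exit(ItemJournalLine.Quantity);
end;
```

Calling it with IsolationLevel::ReadUncommitted and then with IsolationLevel::ReadCommitted while the background session still holds its transaction open should give the two totals and read times side by side.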

This is possible thanks to the READ_COMMITTED_SNAPSHOT option in SQL Server, which is not one of the isolation levels defined in the ANSI SQL standard. Snapshot isolation is an alternative method of managing concurrent transactions implemented in Microsoft SQL Server. This method is often referred to as optimistic concurrency (remember this term from the old NAV days?), as opposed to the pessimistic concurrency of the ANSI SQL model.

With snapshot isolation enabled, SQL Server uses row versioning instead of shared read locks to give readers a consistent view of the data. In practice, a transaction that reads rows while another transaction is updating them works on a separate, previously committed copy of those rows, so readers and writers do not block each other.

When snapshot isolation is enabled, SQL Server keeps the previous version of a modified row instead of making readers wait on the lock. Row versions are numbered, and a reader sees only the versions that were committed when its snapshot was taken; any versions created later are hidden from it. Under the full SNAPSHOT isolation level, the snapshot is taken at the start of the transaction, and when the transaction tries to modify a row, the database engine verifies that no conflicting change has been committed to the same row since then, returning an error if one has. Under READ_COMMITTED_SNAPSHOT, which is what this test relies on, the snapshot is taken at the start of each statement.

As mentioned in this Microsoft Learn article, the READ_COMMITTED_SNAPSHOT option is enabled by default in Azure SQL databases (unlike on-prem SQL Server, where this feature must be enabled explicitly), and now Business Central SaaS environments can unleash the full power of snapshot isolation. So far, in the preview environment of Business Central, the default read isolation is the usual ReadUncommitted, but going forward, ReadCommitted is supposed to become the default isolation option, which means that the possibility of dirty reads will be eliminated in the Business Central application with little to no impact on performance.
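
Until the default changes, a read that must not see uncommitted data can request the level explicitly instead of relying on the platform default. The snippet below is a hedged illustration only; the procedure name CountCommittedLines is hypothetical and does not come from the test code in this post.

```al
local procedure CountCommittedLines(ItemNo: Code[20]): Integer
var
    ItemJournalLine: Record "Item Journal Line";
begin
    ItemJournalLine.SetRange("Item No.", ItemNo);
    // Ask for ReadCommitted explicitly rather than depending on the default isolation.
    ItemJournalLine.ReadIsolation := IsolationLevel::ReadCommitted;
    exit(ItemJournalLine.Count());
end;
```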

Does this sound too optimistic? Well, maybe. There is no bulletproof solution for every case, and there are certain trade-offs even with snapshot isolation, which I'm going to explore in the following posts.